2005: Web-to-Wireless MMO Technology

In 2004, I designed and created the HTTP-based multiplayer system that enables mobile phone owners to play peer-to-peer games through a central node, so that N×N gameplay is possible.

I chose to support only HTTP connections because, at the time, HTTP was the only connection type available on ALL phone models.

In 2005, I proposed to the company that we push the envelope one step further, and I designed and created a second-generation system that enables both PC players and mobile phone owners to play together in the same MMO; that is what we now call "web-to-wireless" gaming.

Defining the actual objectives

Market segmentation is probably one of the most challenging issues to address in wireless game development.

Though more than 800 million brand-new handsets were sold in 2005, and probably 1 billion will be sold in 2006, the installed base still needs to be addressed in order to maximize the

Another important issue is certainly scalability: the term we use for the differences in nature and performance between handset models. Some handsets have small screens, others larger ones; some have 3D capability; some support TCP connections, others don't; and so on. It is therefore important to categorize our game features in order to know which features are required by the set of targeted handsets.

With that in mind, I set three goals for the project:

* Cross visual representation: enable both 3D and 2D gameplay of the same game. As most mobile handset models only support 2D display, it was a MUST to support them in addition to 3D-capable models.

* Cross network, cross operator: enable 3G networks but also GPRS. Despite their very different performance, we should be able to reach as many handset models as possible. We also want users to play together regardless of their operator.

* Cross platform: enable both personal computers (Internet) and mobile phones (radio network) to play together, seamlessly.

Building the 3D world and the PC engine

The game implements catch-the-flag gameplay. As a player, you are given a car that appears somewhere in the world. Your goal is to catch the flag (a cup in the final game) and keep it as long as possible, avoiding the chasing cars trying to steal it.

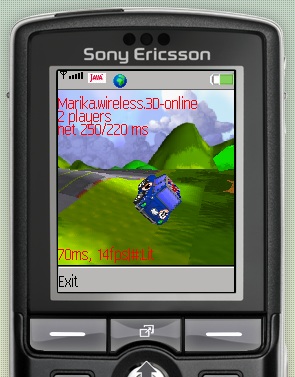

PC users (take "PC" as a general term, since we support any platform running Java™) enjoy real-time 3D graphics, as do 3D-capable mobiles such as SonyEricsson JP-6 phones.

Phones without 3D capability use the 2D view of the same game.

3D players see the scene in perspective through a third-person camera, while 2D players get an orthographic, top-down camera.

The world database is designed to provide all the information efficiently to every type of client, whether 2D or 3D, on PC or on mobile.

MMO worlds are very large and cannot be held in memory as a whole. For this reason, the world must support streaming: the required parts are downloaded from the servers on demand.

The world is made of a grid of square modules. Each module is tile-based in the x-z plane (64×64 quads), and the altitude y is taken from a height-field image.
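To make the grid layout concrete, here is a minimal Java sketch of how a world position could be mapped to a module and a quad, and how an altitude could be read from the height field. It assumes one world unit per quad, a height field of 65×65 8-bit samples per module (one per vertex), and bilinear interpolation between samples; the names `WorldGrid`, `moduleIndex`, `localQuad` and `height` are hypothetical, not the project's code.

```java
/**
 * Hypothetical sketch: world (x, z) -> module index and local quad, plus
 * altitude lookup in a module's height field. Assumes 1 world unit per quad
 * and 8-bit (0..255) height samples, one per quad vertex.
 */
final class WorldGrid {
    static final int QUADS_PER_MODULE = 64;           // 64x64 quads per module
    static final int SAMPLES = QUADS_PER_MODULE + 1;  // 65 vertices per side

    /** Module containing world coordinate v (floorDiv keeps negatives correct). */
    static int moduleIndex(double v) {
        return Math.floorDiv((int) Math.floor(v), QUADS_PER_MODULE);
    }

    /** Quad index inside its module, always in [0, 63]. */
    static int localQuad(double v) {
        return Math.floorMod((int) Math.floor(v), QUADS_PER_MODULE);
    }

    /**
     * Bilinearly interpolated altitude at local position (lx, lz) in [0, 64],
     * read from field[z][x] (0..255) and scaled to [0, maxHeight].
     */
    static double height(int[][] field, double lx, double lz, double maxHeight) {
        int x0 = Math.min((int) Math.floor(lx), QUADS_PER_MODULE - 1);
        int z0 = Math.min((int) Math.floor(lz), QUADS_PER_MODULE - 1);
        double fx = lx - x0;
        double fz = lz - z0;
        double h00 = field[z0][x0], h10 = field[z0][x0 + 1];
        double h01 = field[z0 + 1][x0], h11 = field[z0 + 1][x0 + 1];
        double h = (h00 * (1 - fx) + h10 * fx) * (1 - fz)
                 + (h01 * (1 - fx) + h11 * fx) * fz;
        return h / 255.0 * maxHeight;
    }
}
```

Using `Math.floorDiv` and `Math.floorMod` rather than `/` and `%` keeps negative coordinates in the correct module, which matters once the world extends in every direction from its origin.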

As the world is potentially very large, a tessellation system generates primitives on demand.

The collision data structure is similar to the one used for the geometry (including a level-of-detail, or LOD, system), and collision data is sub-sampled on demand, just in time (JIT).

A cache assists the tessellation and sub-sampling processes in order to make the best use of memory and minimize computation.
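One simple way to build such a cache is a least-recently-used (LRU) map keyed by module and level of detail. The sketch below assumes string keys of the form `mx:mz:lod` and a caller-supplied builder function standing in for tessellation or sub-sampling; `ModuleCache` and its members are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

/**
 * Hypothetical LRU cache in front of tessellation / sub-sampling. Results are
 * keyed by (module x, module z, LOD); once capacity is exceeded, the least
 * recently used entry is evicted, so memory stays bounded while recently
 * visited modules are not recomputed.
 */
final class ModuleCache<V> {
    private final Map<String, V> entries;
    private final Function<String, V> builder; // stands in for tessellate / sub-sample
    int builds = 0;                             // number of times the builder ran

    ModuleCache(int capacity, Function<String, V> builder) {
        this.builder = builder;
        // accessOrder = true: iteration order is least- to most-recently accessed
        this.entries = new LinkedHashMap<String, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, V> eldest) {
                return size() > capacity; // evict the LRU entry when over capacity
            }
        };
    }

    static String key(int mx, int mz, int lod) {
        return mx + ":" + mz + ":" + lod;
    }

    /** Returns the cached result, building (and caching) it on a miss. */
    V get(int mx, int mz, int lod) {
        String k = key(mx, mz, lod);
        V value = entries.get(k); // a hit also marks the entry as recently used
        if (value == null) {
            value = builder.apply(k);
            builds++;
            entries.put(k, value);
        }
        return value;
    }

    int cachedCount() {
        return entries.size();
    }
}
```

`LinkedHashMap` with access ordering plus `removeEldestEntry` is the standard-library way to get LRU eviction without writing a linked list by hand.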

When possible

otherwise I use

Extreme programming, the best way to organize yourself or a team, by far

As you may already know, I have always used extreme programming to develop my software. Once again, I defined a series of micro-milestones throughout the development stage.

This way, I can demo the technology every week to the numerous visitors who stop by my desk each day! And because each milestone matches a weekly objective, I can visually demonstrate new features or improvements every week.

I started off with existing assets, my Mario™ database, as placeholders. They are 2D sprites projected onto a transparent cube, as you can see on the first screenshot on the right.

For the ground, I first used a zero-height field, which makes it look like a perfectly flat plane. Of course, it is still made of 4096 quads (64×64, the size of a real module) in order to reflect real-world rendering performance.

Each quad is mapped with a tile taken from a relatively "large" tile map, and any rotation or flip can be applied to each quad.
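The per-quad rotation and flip can be expressed purely in texture coordinates. This hypothetical sketch assumes a square atlas of `tilesPerRow × tilesPerRow` tiles indexed row by row, a horizontal flip applied before a rotation in quarter turns, and corners returned in the order (0,0), (1,0), (1,1), (0,1); `TileMapping.uvs` is an illustrative name.

```java
/**
 * Hypothetical sketch: UVs for one ground quad whose tile comes from a square
 * atlas, with optional horizontal flip and rotation in 90-degree steps.
 * Output: u0,v0, u1,v1, u2,v2, u3,v3 for quad corners (0,0),(1,0),(1,1),(0,1).
 */
final class TileMapping {
    static float[] uvs(int tile, int tilesPerRow, int quarterTurns, boolean flipX) {
        float size = 1f / tilesPerRow;                // one tile's extent in UV space
        float u0 = (tile % tilesPerRow) * size;       // tile origin inside the atlas
        float v0 = (tile / tilesPerRow) * size;
        float[][] corners = {{0, 0}, {1, 0}, {1, 1}, {0, 1}};
        int turns = Math.floorMod(quarterTurns, 4);
        float[] out = new float[8];
        for (int i = 0; i < 4; i++) {
            float s = corners[i][0];
            float t = corners[i][1];
            if (flipX) s = 1 - s;                     // mirror first...
            for (int r = 0; r < turns; r++) {         // ...then rotate 90 degrees per turn
                float previous = s;
                s = 1 - t;                            // (s, t) -> (1 - t, s)
                t = previous;
            }
            out[2 * i] = u0 + s * size;
            out[2 * i + 1] = v0 + t * size;
        }
        return out;
    }
}
```

Because only UVs change, all quads of a module can share the same vertex positions, and a single atlas texture serves the whole ground.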

Network infrastructure

Designing the network protocol and developing an implementation

Based on my experience in MMO design, mainly with the previous system, I decided to develop a brand-new protocol with the following features:

* high performance: we need to handle hundreds to thousands of simultaneously connected people

* high extensibility

* built-in diagnostic system: the system must enable any human or software administrator to detect and prevent performance drops (due to overload) and future failures such as a hub or bridge shutdown

* built-in auto-optimization and auto-fix system: the system must auto-regulate bandwidth usage and have fallback systems in order to overcome any failure automatically

Additionally, the system must gracefully handle software cycles:

* automatic software updates, without requiring a service shutdown,

* game data deployment, again without any service interruption.

All these requirements led to the creation of both:

* the SLLTP protocol: a binary data-packet protocol with built-in routing functionality. It is well suited to wireless networks as well as Internet or LAN connections.

* a decentralized system architecture in which each server module is independent, with software buses that enable communication paths to be created on demand.
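The actual SLLTP wire format is not described in this article. Purely to illustrate a binary packet that carries routing information and message importance in its header, the sketch below assumes a 12-byte big-endian header (source module ID, destination module ID, importance, message type, payload length) followed by the payload. Every field, size, and the `BROADCAST = 0` convention are assumptions, and `Packet` is a hypothetical name.

```java
import java.nio.ByteBuffer;

/**
 * Hypothetical binary packet in the spirit of SLLTP (NOT its real layout):
 * [source:int][destination:int][importance:byte][type:byte][length:ushort][payload]
 */
final class Packet {
    static final int HEADER_BYTES = 4 + 4 + 1 + 1 + 2;
    static final int BROADCAST = 0;                   // assumed "every module" address

    final int source;
    final int destination;
    final byte importance;
    final byte type;
    final byte[] payload;

    Packet(int source, int destination, int importance, int type, byte[] payload) {
        if (payload.length > 0xFFFF) throw new IllegalArgumentException("payload too large");
        this.source = source;
        this.destination = destination;
        this.importance = (byte) importance;
        this.type = (byte) type;
        this.payload = payload.clone();
    }

    /** Serializes header + payload (ByteBuffer is big-endian by default). */
    byte[] encode() {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_BYTES + payload.length);
        buf.putInt(source).putInt(destination)
           .put(importance).put(type)
           .putShort((short) payload.length)
           .put(payload);
        return buf.array();
    }

    static Packet decode(byte[] bytes) {
        ByteBuffer buf = ByteBuffer.wrap(bytes);
        int src = buf.getInt();
        int dst = buf.getInt();
        byte importance = buf.get();
        byte type = buf.get();
        int length = Short.toUnsignedInt(buf.getShort());
        byte[] payload = new byte[length];
        buf.get(payload);
        return new Packet(src, dst, importance, type, payload);
    }

    /** A bus only needs the header to decide whether to deliver to a module. */
    boolean isFor(int moduleId) {
        return destination == BROADCAST || destination == moduleId;
    }
}
```

Keeping routing and importance in a fixed-size header lets a hub route or reorder packets without parsing their payloads.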

Server modules have built-in hot-upgrade functionality: if an upgrade is available, a module can upgrade itself in a snap, without stopping the infrastructure. Once up and running again, it reconnects to the bus and resumes.

Infrastructure features

The SLLTP protocol also carries administration traffic between modules. Control messages can travel through the MMO to request actions from a set of other modules or from a single target.

This is the basis for diagnostics: administrators can connect from any point to any other, including game clients running on a handset. For instance, you can ask a module to export its internal terminal to your screen.

This human communication goes through the chat console of a game client or of a server module front-end.

A large set of commands is available:

* whosthere, whois, shutdown,

* ping, traceroute, uptime,

* export console, export graphics,

* spawnbot, etc.
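As an illustration of how such console commands could be dispatched inside a module, here is a hypothetical sketch in which a table maps command names to handlers. Only `whosthere`, `ping` and `uptime` are wired up, with placeholder behavior, plus an assumed `help` command; the real command semantics, arguments and output are not documented in this article.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

/**
 * Hypothetical admin console dispatcher. Command names come from the list
 * above; the handlers' behavior is placeholder only.
 */
final class AdminConsole {
    private final Map<String, Function<String[], String>> commands = new TreeMap<>();
    private final long startMillis = System.currentTimeMillis();

    AdminConsole(String moduleName) {
        commands.put("whosthere", args -> moduleName);
        commands.put("ping", args -> "pong from " + moduleName);
        commands.put("uptime", args -> (System.currentTimeMillis() - startMillis) / 1000 + " s");
        commands.put("help", args -> String.join(", ", commands.keySet()));
    }

    /** Parses "command arg1 arg2 ..." from the chat console and runs the handler. */
    String execute(String line) {
        String[] parts = line.trim().split("\\s+");
        Function<String[], String> handler = commands.get(parts[0].toLowerCase());
        if (handler == null) return "unknown command: " + parts[0];
        String[] args = Arrays.copyOfRange(parts, 1, parts.length);
        return handler.apply(args);
    }
}
```

A table-driven design keeps the command set extensible: a module can register new commands (such as `spawnbot`) without touching the parser.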

Load balancing, by design

When a client or a server module tries to connect to the infrastructure, it is first routed to authentication servers, in order to determine rights and access.

Once access is granted, the system asks the load-balancing server to choose the IP and port of an available machine that can take the connection; this IP and port are returned to the client or server module along with a temporary session ID.

Upon reception, the module disconnects, reconnects to the given IP and port, and authenticates itself with the session ID before the session expires.

This solves most load-balancing issues.
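The redirect-and-reconnect handshake above can be sketched as follows. This is a hypothetical illustration: it assumes the load metric is a simple connection count, that session IDs are random 64-bit values, and that each ID is single-use and expires after a fixed TTL. `LoadBalancer`, `assign` and `accept` are illustrative names, and time is passed in explicitly to keep the sketch testable.

```java
import java.security.SecureRandom;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of least-loaded assignment with expiring session IDs. */
final class LoadBalancer {
    static final class Server {
        final String host;
        final int port;
        int connections;                  // assumed load metric
        Server(String host, int port, int connections) {
            this.host = host;
            this.port = port;
            this.connections = connections;
        }
    }

    /** Returned to the client: where to reconnect, plus a temporary session ID. */
    static final class Grant {
        final Server server;
        final String sessionId;
        final long expiresAt;
        Grant(Server server, String sessionId, long expiresAt) {
            this.server = server;
            this.sessionId = sessionId;
            this.expiresAt = expiresAt;
        }
    }

    private final List<Server> servers;
    private final long ttlMillis;
    private final Map<String, Grant> pending = new HashMap<>();
    private final SecureRandom random = new SecureRandom();

    LoadBalancer(List<Server> servers, long ttlMillis) {
        this.servers = servers;
        this.ttlMillis = ttlMillis;
    }

    /** Called after authentication succeeded: pick the least-loaded server. */
    Grant assign(long now) {
        Server best = servers.get(0);
        for (Server s : servers) {
            if (s.connections < best.connections) best = s;
        }
        String id = Long.toHexString(random.nextLong());
        Grant grant = new Grant(best, id, now + ttlMillis);
        pending.put(id, grant);
        return grant;
    }

    /** Called by a server when a client reconnects with its session ID. */
    boolean accept(Server server, String sessionId, long now) {
        Grant grant = pending.remove(sessionId);  // each ID can be used only once
        if (grant == null || grant.server != server || now > grant.expiresAt) return false;
        server.connections++;
        return true;
    }
}
```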

Hiding latency with the protocol

Solving car/car collisions throughout the network

One of the biggest issues in an MMO is synchronizing events over the network so that their outcome is the same for all clients and server modules.

In my case, I wanted any player to be able to steal the flag held by an opponent.

Unfortunately, instant notification does not exist over a network, because the time it takes to transport a piece of information (the latency) is non-zero. As a consequence, a collision detected by one module at time t is unlikely to be detected at exactly the same time by another module that is supposed to handle the exact same situation.

One consequence could be the flag switching back and forth between the two cars during the short period in which they collide with each other.

I implemented a hierarchy of messages that any module attached to the bus can sort. This solves most of the difficulties.
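The article does not detail the message hierarchy itself. As one possible reading, the hypothetical sketch below collects competing "take the flag" claims during an arbitration window and sorts them with the same total order on every module (hierarchy level, then shared server tick, then car ID), so every module picks the same single winner regardless of the order in which messages arrived. The fields, the ordering, and the notion of a window are assumptions.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical deterministic arbitration of competing flag claims. */
final class FlagArbiter {
    static final class Claim {
        final int level;   // rank in the message hierarchy: lower value wins (assumed)
        final long tick;   // shared server tick at which the collision was detected
        final int carId;   // unique car ID, final tie-breaker
        Claim(int level, long tick, int carId) {
            this.level = level;
            this.tick = tick;
            this.carId = carId;
        }
    }

    /** Same total order on every module, independent of arrival order. */
    static final Comparator<Claim> ORDER =
            Comparator.<Claim>comparingInt(c -> c.level)
                      .thenComparingLong(c -> c.tick)
                      .thenComparingInt(c -> c.carId);

    /** The single winning claim of one arbitration window, if any. */
    static Optional<Claim> winner(List<Claim> window) {
        return window.stream().min(ORDER);
    }
}
```

The key property is that the order is total: two modules that received the same claims in different orders still agree on the winner, so the flag changes hands once instead of bouncing between the cars.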

Reducing the effect of latency

Latency makes the notion of simultaneous events meaningless.

Although performance levels differ depending on the underlying technology, there is no miracle technique to look forward to.

Wireless networks perform particularly badly in this area: UMTS can give you 600 ms of latency, while GPRS gives you anywhere from 100 to 1200 ms!

Latency is hidden through message transactions: a kind of finite-state machine, which I implemented successfully, nicely solves almost all issues for this kind of game.
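A message transaction of this kind can be sketched as a small finite-state machine: the client shows the result of its own request immediately, then confirms or rolls it back when the server's verdict arrives. This is a hypothetical illustration, not the game's actual state machine; the states, the flag-request scenario and the timeout (assumed at 1500 ms, just above the 1200 ms GPRS worst case quoted above) are assumptions.

```java
/**
 * Hypothetical optimistic transaction: show the outcome locally at once,
 * then confirm or roll back on the server's reply (or on timeout).
 */
final class FlagTransaction {
    enum State { IDLE, PENDING, CONFIRMED, ROLLED_BACK }

    private State state = State.IDLE;
    private long sentAt;
    private final long timeoutMillis;

    FlagTransaction(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    State state() {
        return state;
    }

    /** Local collision detected: request the flag and show it right away. */
    void requestFlag(long now) {
        if (state == State.PENDING || state == State.CONFIRMED) return; // one at a time
        state = State.PENDING;
        sentAt = now;
    }

    /** Server verdict received. */
    void onServerReply(boolean granted) {
        if (state == State.PENDING) state = granted ? State.CONFIRMED : State.ROLLED_BACK;
    }

    /** Called every frame: give up if the verdict arrives too late. */
    void tick(long now) {
        if (state == State.PENDING && now - sentAt > timeoutMillis) state = State.ROLLED_BACK;
    }

    /** Whether our car should currently be drawn holding the flag. */
    boolean showFlag() {
        return state == State.PENDING || state == State.CONFIRMED;
    }
}
```

The player sees an immediate reaction instead of waiting for a round trip; the occasional rollback is the price paid for hiding the latency the rest of the time.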

How to handle a very high number of simultaneous connections

Having hundreds of simultaneous connections generates thousands of messages on the buses of the infrastructure.

Bandwidth usage becomes very high and we observe network jams. Server modules, especially the hub, then become saturated, which continuously increases latency.

This leads to overloaded CPUs and message saturation, which can block the whole infrastructure.

To limit this disastrous effect, I implemented three main solutions:

* Buses and modules continuously monitor the effective bandwidth available to them. When performance drops, they limit outgoing message emission.

* The SLLTP protocol has built-in support for "message importance", which enables the infrastructure to reorder message delivery.

* Hubs implement a saturation mechanism: when a hub detects that the bus is about to overload, it trashes less important messages in order to de-saturate connections. The trashing rate depends on the actual real-time jam situation.
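The last two mechanisms can be combined in a hub queue: forward the most important messages first, and when the backlog grows, trash low-importance leftovers. In this hypothetical sketch, the hub forwards at most `capacityPerTick` messages per tick; the "jam level" is the number of ticks' worth of traffic waiting, and leftover messages whose importance is below that level are trashed, so heavier jams trash more importance levels. The capacity model and the threshold rule are assumptions, not the hub's real policy.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical hub queue: importance-ordered delivery plus jam-dependent trashing. */
final class Hub {
    static final class Msg {
        final int importance;   // higher = more important
        final long seq;         // arrival order, keeps FIFO within one importance level
        final String body;
        Msg(int importance, long seq, String body) {
            this.importance = importance;
            this.seq = seq;
            this.body = body;
        }
    }

    private final PriorityQueue<Msg> queue = new PriorityQueue<>(
            Comparator.comparingInt((Msg m) -> m.importance).reversed()
                      .thenComparingLong(m -> m.seq));
    private final int capacityPerTick;
    private long nextSeq = 0;
    int trashed = 0;

    Hub(int capacityPerTick) {
        this.capacityPerTick = capacityPerTick;
    }

    void submit(int importance, String body) {
        queue.add(new Msg(importance, nextSeq++, body));
    }

    /** Forwards up to capacity messages (most important first), then trashes under jam. */
    List<Msg> tick() {
        int jamLevel = queue.size() / capacityPerTick;  // ticks' worth of waiting traffic
        List<Msg> sent = new ArrayList<>();
        while (!queue.isEmpty() && sent.size() < capacityPerTick) {
            sent.add(queue.poll());
        }
        int before = queue.size();
        queue.removeIf(m -> m.importance < jamLevel);   // heavier jam -> more levels trashed
        trashed += before - queue.size();
        return sent;
    }

    int backlog() {
        return queue.size();
    }
}
```

For example, a position update superseded by the next one can be given low importance and safely trashed, while a flag transfer keeps a high importance and always gets through first.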

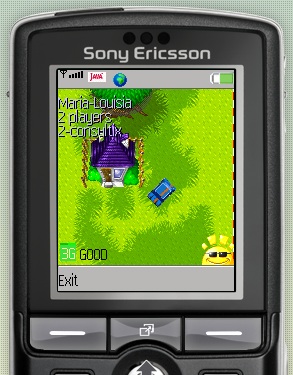

2D Wireless Client

The final 2D wireless version uses two sets of graphics, which you can choose from the start menu. The 2D graphics were designed from real 3D meshes that we pre-rendered in 3DS Max; the resulting images were then cleaned up manually, pixel by pixel.

Regarding the quality players perceived, I noticed that lag caused by dramatically varying network conditions was seriously damaging the perceived fluidity of the game.

For this reason, I designed a set of funny icons and asked my artist to draw them. Each icon symbolizes a network condition, in a system very similar to weather-forecast iconography.

Whenever the network conditions change, a sliding icon replaces the previous one to indicate the new conditions, as shown below, where you can see the "sunny face" denoting the "good, no problem" state.
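A weather-style indicator boils down to classifying a smoothed latency measurement into a few states and changing the icon only when the state changes. In this hypothetical sketch, only the "sunny" state comes from the text; the other state names, the thresholds (chosen around the UMTS and GPRS latencies quoted earlier) and the exponential-smoothing factor are assumptions.

```java
/** Hypothetical network "weather" states, keyed by maximum latency in ms. */
enum NetworkWeather {
    SUNNY(300), CLOUDY(700), RAINY(1200), STORMY(Long.MAX_VALUE);

    final long maxLatencyMillis;

    NetworkWeather(long maxLatencyMillis) {
        this.maxLatencyMillis = maxLatencyMillis;
    }

    static NetworkWeather of(long latencyMillis) {
        for (NetworkWeather w : values()) {
            if (latencyMillis <= w.maxLatencyMillis) return w;
        }
        return STORMY;
    }
}

/** Smooths raw latency samples so that the icon does not flicker on every spike. */
final class LatencyMeter {
    private double smoothed = -1;   // -1 means "no sample yet"

    NetworkWeather update(long sampleMillis) {
        // Exponential moving average: 80% history, 20% new sample (assumed weights).
        smoothed = smoothed < 0 ? sampleMillis : 0.8 * smoothed + 0.2 * sampleMillis;
        return NetworkWeather.of(Math.round(smoothed));
    }
}
```

The client would trigger the sliding-icon animation only when `update` returns a state different from the one currently displayed.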

I also innovated in the field of car control. Most car games, if not all, use a relative control system, where you are placed "in" the car: pressing the left arrow turns the car to its own left, regardless of its actual orientation on the screen. Holding the left key down makes you drive in a circle.

Instead, I programmed an absolute system: if you press left, you go toward the left of the screen. Unlike the relative system, holding the left key down eventually makes you drive in a straight line.

Clearly, this system yields superior playability and, moreover, is better suited to casual players because it feels natural.
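The difference between the two schemes shows up in a few lines of steering code. In this hypothetical sketch, headings are in degrees (0 = screen right, 90 = screen up, so LEFT is 180), and the turn rate of 15 degrees per frame is an assumption; `CarControl` and its methods are illustrative names.

```java
/** Hypothetical comparison of relative and absolute steering. */
final class CarControl {
    static final double TURN_RATE = 15; // degrees per frame (assumed)

    /** Relative scheme: LEFT always turns counter-clockwise, so holding it draws a circle. */
    static double relativeLeft(double heading) {
        return normalize(heading + TURN_RATE);
    }

    /** Absolute scheme: turn toward the target screen direction by the shortest way. */
    static double absolute(double heading, double target) {
        double diff = normalize(target - heading + 180) - 180; // signed, in [-180, 180)
        if (Math.abs(diff) <= TURN_RATE) return normalize(target); // aligned: go straight
        return normalize(heading + Math.copySign(TURN_RATE, diff));
    }

    /** Wraps any angle into [0, 360). */
    static double normalize(double deg) {
        double d = deg % 360;
        return d < 0 ? d + 360 : d;
    }
}
```

Holding LEFT with `relativeLeft` cycles the heading through all 360 degrees, whereas `absolute(heading, 180)` converges to 180 and then stays there, which is the straight line described above.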

3D Wireless Client

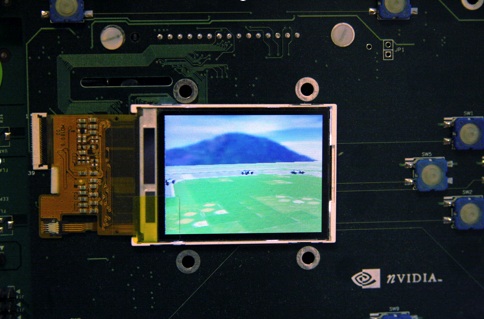

NVidia GoForce 3D Chip

For this specific part of the project, I partnered with NVidia in order to get early access to their forthcoming GoForce 4500/4800 3D graphics hardware accelerators.

In June 2005, I received an electronic board built around a Motorola phone running a Linux kernel, featuring a GoForce 4500 chip and a QVGA color screen.

The board had to be connected to a PC running Red Hat 7.3 in order to access the network and disks.

An ARM C compiler had to be used to build OpenGL ES 1.0 C code; unfortunately, no VM with JSR-184 was available at that time. A few weeks later, NVidia sent me a C upgrade implementing OpenGL ES 1.1 and giving access to shaders, which I experimented with as well.

I created a stripped-down port of my J2SE code, and in a couple of days I had 32 cars running in the world. This let me experiment with the hardware and benchmark real-world performance.

JSR-184 J2me version for SonyEricsson W800i

By the end of the year, I received a pre-release version of the W800i, which is equipped with the same GoForce 4500 chip.

Unlike the board, the handset runs a Java Virtual Machine and implements JSR-184.

After many experiments, I was convinced that several factors were dramatically reducing performance compared with what I had achieved on the board with straight C:

* First, Java is a source of performance loss. Its ease of use, flexibility and "write once, run anywhere" motto come at a price.

* Second, most phones have poor memory access throughput, and this handset, though it compares well against other existing handsets, does not escape this penalty. Note that this handset implements JP-6, the SonyEricsson Java Platform. At the time of writing (June 24th, 2006), SonyEricsson has just released the new JP-7 platform, which dramatically improves this area.

3GSM 2006, Barcelona: Putting it all together

In April 2006 in Barcelona, MobileScope/In-Fusio demonstrated Project X on its booth; people were invited to play a persistent catch-the-flag game with either their mobile phones or the PCs.